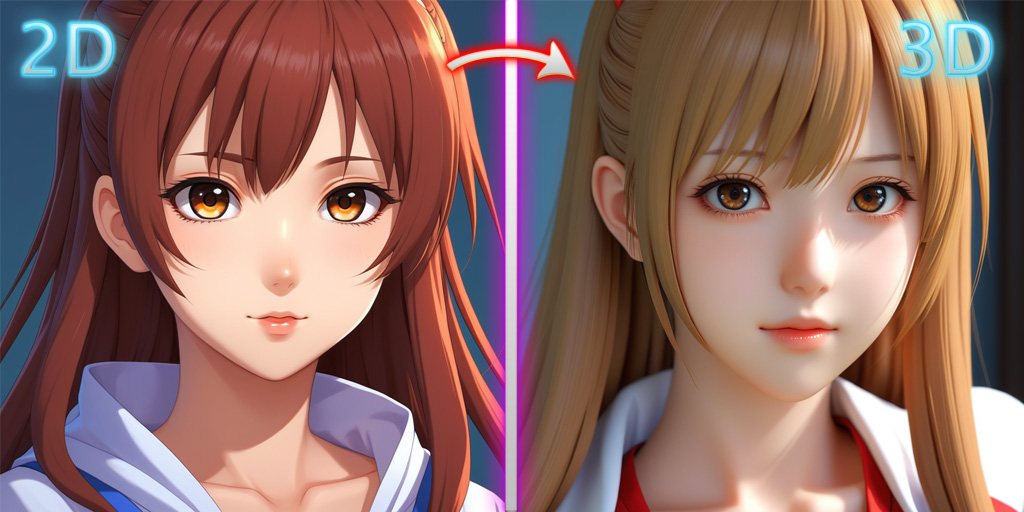

Can AI Create 3D Models from 2D Images? A Revolution for Digital Design

Imagine taking a simple photo of an object and, moments later, obtaining a fully functional 3D model ready to be used in a video game, a design project, or an animation. Science fiction? No, it’s the reality that Artificial Intelligence (AI) is making possible. But how does this work? What technologies and tools are involved? And most importantly, what are the limitations and future potential? In this article, we will explore these questions and provide reliable sources for further reading.

How Does 3D Model Creation from 2D Images Work?

Creating a 3D model from a 2D image relies on a process known as 3D reconstruction. A typical depth-based pipeline involves these main steps:

Image analysis: AI analyzes the image to extract information about edges, textures, and lighting.

Depth prediction: Using depth estimation algorithms, AI estimates how close to or far from the camera each part of the image is.

Mesh creation: Once depth is known, each point is placed in 3D space and neighboring points are connected into a mesh (usually made of triangles) that represents the object's geometric structure.

Texture application: The colors and textures of the original image are mapped onto the 3D model to make it look realistic.
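Steps 2 to 4 can be illustrated with a short sketch that turns a depth map into a colored triangle mesh. It assumes the depth map has already been produced by a depth-estimation network (step 2, not shown), and the hand-written depth values, the pinhole-camera parameters `fx`, `fy`, `cx`, `cy`, and the function name `depth_to_mesh` are illustrative assumptions, not the method used by any of the tools below.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Color = Tuple[int, int, int]
Face = Tuple[int, int, int]


def depth_to_mesh(
    depth: List[List[float]],
    colors: List[List[Color]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_jump: float = 0.5,
) -> Tuple[List[Vertex], List[Color], List[Face]]:
    """Turn a per-pixel depth map plus the source pixels into a colored triangle mesh.

    depth[v][u] is the (already estimated) distance along the camera's z axis for
    pixel (u, v); fx, fy, cx, cy are assumed pinhole-camera intrinsics in pixels.
    """
    h, w = len(depth), len(depth[0])
    vertices: List[Vertex] = []
    vertex_colors: List[Color] = []

    # Mesh creation, part 1: lift every pixel into 3D with the inverse pinhole model.
    for v in range(h):
        for u in range(w):
            z = depth[v][u]
            vertices.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
            # Texture application (simplified): reuse the pixel's color for its vertex.
            vertex_colors.append(colors[v][u])

    # Mesh creation, part 2: split each 2x2 block of neighboring pixels into two triangles.
    faces: List[Face] = []
    for v in range(h - 1):
        for u in range(w - 1):
            i00 = v * w + u  # top-left
            i01 = i00 + 1    # top-right
            i10 = i00 + w    # bottom-left
            i11 = i10 + 1    # bottom-right
            zs = [vertices[i][2] for i in (i00, i01, i10, i11)]
            # Skip blocks that straddle a large depth jump (likely an object edge);
            # otherwise the mesh would stretch a "curtain" between object and background.
            if max(zs) - min(zs) > max_jump:
                continue
            # Both triangles use the same winding order.
            faces.append((i00, i10, i01))
            faces.append((i01, i10, i11))
    return vertices, vertex_colors, faces


# Usage: a hypothetical 3x3 depth map of a flat surface 2 units away,
# with one corner pixel jumping to 5 units (a depth discontinuity).
depth_map = [[5.0, 2.0, 2.0], [2.0, 2.0, 2.0], [2.0, 2.0, 2.0]]
pixels = [[(200, 120, 80)] * 3 for _ in range(3)]
verts, vert_colors, tris = depth_to_mesh(depth_map, pixels, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
print(len(verts), "vertices,", len(tris), "triangles")
```

Skipping blocks with a large depth jump is a crude use of the edge information from step 1. Only surfaces visible in the photo receive vertices, so the back of the object is missing from a mesh built this way.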

Tools and Technologies Available

Several tools, services, and research projects can turn 2D inputs into 3D models. Here are some examples:

1. Kaedim

A service that uses AI to generate 3D models from 2D sketches or images. Kaedim is valued for its speed and ease of use, which makes it a good fit for designers and game developers.

Official website: Kaedim

2. NVIDIA Instant NeRF

This NVIDIA technology uses Neural Radiance Fields (NeRF) to build a 3D volumetric representation of a scene from a set of 2D photos taken from different angles. Instant NeRF stands out for being much faster than the original NeRF method and for the high quality of the details it captures.

Learn more: NVIDIA Instant NeRF

3. DreamFusion by Google

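DreamFusion, a research project from Google, generates 3D models from textual descriptions: it uses a pretrained 2D text-to-image diffusion model to guide the optimization of a NeRF-based 3D representation. It is primarily a text-to-3D method, although later research has applied similar techniques to single input images.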

Official article: DreamFusion

4. Blender with AI Plugins

Blender, a popular open-source 3D modeling application, supports third-party add-ons that bring AI algorithms into the workflow, letting users turn 2D images into 3D models without leaving the program.

Official website: Blender
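Neural Radiance Fields, the technique behind NVIDIA Instant NeRF listed above, represent a scene as a function that returns a density and a color for any 3D point (in full NeRF, the color also depends on the viewing direction). A pixel is formed by accumulating those values along the camera ray through it. The minimal sketch below shows only that accumulation (volume-rendering) step; the trained neural network is replaced by a hypothetical hand-written `sphere_field`, and NVIDIA's optimized implementation is not reproduced here.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]
Field = Callable[[Vec3], Tuple[float, RGB]]  # point -> (density, color)


def sphere_field(point: Vec3) -> Tuple[float, RGB]:
    """Hypothetical stand-in for a trained NeRF network: a dense red unit sphere."""
    x, y, z = point
    if x * x + y * y + z * z < 1.0:
        return 50.0, (1.0, 0.0, 0.0)
    return 0.0, (0.0, 0.0, 0.0)  # empty space


def render_ray(origin: Vec3, direction: Vec3, field: Field,
               near: float, far: float, n_samples: int = 64) -> Tuple[RGB, float]:
    """Volume-render one ray: C = sum_i T_i * alpha_i * c_i,
    with alpha_i = 1 - exp(-sigma_i * delta) and T_i = prod_{j<i} (1 - alpha_j)."""
    delta = (far - near) / n_samples  # equal-length segments along the ray
    transmittance = 1.0               # T_i: fraction of light surviving up to sample i
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of segment i
        point = (origin[0] + t * direction[0],
                 origin[1] + t * direction[1],
                 origin[2] + t * direction[2])
        sigma, rgb = field(point)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of segment i
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    # Return the pixel color and the accumulated opacity (1 - final transmittance).
    return (color[0], color[1], color[2]), 1.0 - transmittance


# Usage: one ray hits the sphere head-on, another passes above it.
hit_rgb, hit_opacity = render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), sphere_field, 0.0, 6.0)
miss_rgb, miss_opacity = render_ray((0.0, 5.0, -3.0), (0.0, 0.0, 1.0), sphere_field, 0.0, 6.0)
print(hit_rgb, hit_opacity)
print(miss_rgb, miss_opacity)
```

Training a NeRF means adjusting the network so that rays rendered this way reproduce the pixels of the input photos, which is why views from many different angles are needed.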

Practical Applications

The ability to generate 3D models from 2D images significantly impacts various industries:

Gaming: Creation of 3D assets for video games in record time.

Animation: Conversion of conceptual drawings into animatable models.

Architecture: Transformation of 2D floor plans into realistic 3D visualizations.

E-commerce: Generation of 3D models for interactive product catalogs.

Limitations and Challenges

Despite its potential, there are still some limitations to consider:

Image quality: Low-quality 2D images can result in inaccurate 3D models.

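Single-view challenges: A single photo does not record depth directly or show the object's hidden sides, so the AI has to infer them. Creating an accurate 3D model from one image is therefore more difficult than working from multiple angles of the same object.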

Complex details: Objects with intricate geometries may not be represented accurately.
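The single-view problem can be shown with a few lines of geometry. In the sketch below, which assumes simple pinhole cameras looking straight down the z axis and uses made-up positions and points, two different 3D points land on the same pixel of the first camera, so that view alone cannot tell them apart. A second camera sees them at different pixels, and intersecting the two viewing rays (midpoint triangulation) recovers the true position. Single-image AI methods have no second ray and must infer the missing depth from what they have learned.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point: Vec3, cam_center: Vec3, f: float = 1.0) -> Pixel:
    """Pinhole projection for a camera at cam_center looking along +z (no rotation)."""
    x, y, z = (p - c for p, c in zip(point, cam_center))
    return (f * x / z, f * y / z)


def pixel_ray(pixel: Pixel, f: float = 1.0) -> Vec3:
    """Direction of the viewing ray through a pixel; every depth along it projects there."""
    u, v = pixel
    return (u / f, v / f, 1.0)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel: the views do not constrain depth")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2, (p1[2] + p2[2]) / 2)


# Hypothetical setup: camera 2 sits one unit to the right of camera 1.
cam1, cam2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
true_point = (0.5, 0.2, 4.0)
decoy = (0.25, 0.1, 2.0)  # same viewing ray from camera 1, half the distance

# One view: both points land on the same pixel, so depth is ambiguous.
print(project(true_point, cam1), project(decoy, cam1))
# Second view: the two points separate...
print(project(true_point, cam2), project(decoy, cam2))
# ...and intersecting the two viewing rays recovers the true position.
estimate = triangulate_midpoint(cam1, pixel_ray(project(true_point, cam1)),
                                cam2, pixel_ray(project(true_point, cam2)))
print(estimate)
```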

The Future of 3D Reconstruction

As artificial intelligence advances, we can expect further improvements in the accuracy and speed of 3D reconstruction. Faster and more capable NeRF variants, along with multimodal models that combine text and images, promise to make this functionality more accessible and versatile.

The ability of AI to transform 2D images into 3D models represents a revolution for the world of design, gaming, and beyond. While there are still challenges to address, progress is rapid, and we are only at the beginning of what this technology can offer.

If you want to learn more, explore the links provided and, why not, try one of these tools yourself to discover firsthand the potential of AI in the 3D world!
